A data pipeline is a process that moves data from one place to another. Data pipelines can transfer data between stores or process data before storing or using it.

A data pipeline typically involves extracting data from a source, transforming it, and then loading it into a destination. The transformation step may include cleaning or filtering the data, aggregating it, or applying a machine-learning model to it. Pipelines can be implemented with various tools and technologies, depending on the needs of the specific use case.

There are many ways to build and configure a data pipeline, and the details vary with the tools and technologies used. In general, though, a pipeline is a series of steps that run in order: each step takes the output of the previous one, from extracting data at one or more sources, through transforming it, to loading the result into one or more targets.
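The idea of a pipeline as a series of steps can be sketched in a few lines of Python. This is only an illustration, not a reference to any particular tool: the function names (`run_pipeline`, `drop_missing_amounts`, `to_cents`) and the sample records are assumptions made up for the example.

```python
from typing import Callable

Record = dict
Step = Callable[[list[Record]], list[Record]]


def run_pipeline(records: list[Record], steps: list[Step]) -> list[Record]:
    """Feed the output of each step into the next one, in order."""
    for step in steps:
        records = step(records)
    return records


def drop_missing_amounts(records: list[Record]) -> list[Record]:
    # Cleaning step: discard records that have no amount.
    return [r for r in records if r.get("amount") is not None]


def to_cents(records: list[Record]) -> list[Record]:
    # Transformation step: add the amount expressed in whole cents.
    return [{**r, "amount_cents": round(r["amount"] * 100)} for r in records]


# Hypothetical in-memory source standing in for a real data store.
source = [
    {"id": 1, "amount": 12.5},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 3.25},
]

result = run_pipeline(source, [drop_missing_amounts, to_cents])
print(result)
```

Keeping each step as a small function that takes and returns a list of records makes it easy to add, remove, or reorder steps without touching the others.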

Data Pipeline & ETL

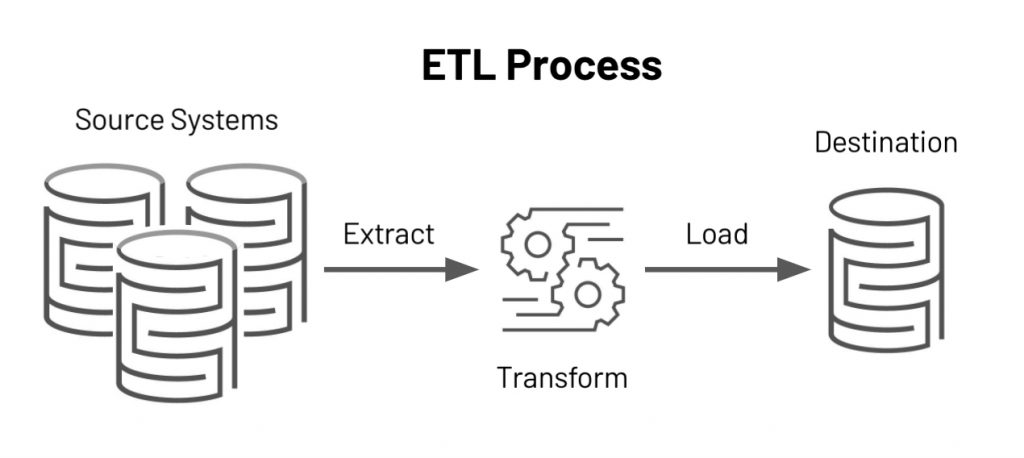

ETL stands for Extract, Transform, and Load. It is a type of data pipeline that moves data from one or more sources to a destination where it can be stored and analyzed.

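Here is a general outline of the process. The first three steps give ETL its name; monitoring is not part of the acronym, but in practice it runs alongside them: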

- Extract: The first step in a data pipeline is to extract data from one or more sources. This could be a database, a file, or another type of data store.

- Transform: Once the data has been extracted, it is often transformed in some way. This could involve cleaning the data, aggregating it, or applying another type of transformation to prepare it for the next pipeline step.

- Load: After the data has been transformed, it is loaded into the target system or data store. This could be a database, a data warehouse, or a storage system.

- Monitor: The data pipeline should be monitored to ensure that it runs smoothly and to catch any issues that arise. This could involve tracking the pipeline’s performance, checking the data for accuracy, and identifying and troubleshooting errors.
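The four steps above can be sketched with nothing but the Python standard library. This is a minimal example under stated assumptions, not a production design: the CSV text, the `avg_temp` table, and the function names are all invented for illustration, an in-memory SQLite database stands in for the target system, and "monitoring" is reduced to logging a few row counts.

```python
import csv
import io
import logging
import sqlite3

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")

# Hypothetical source data; a real pipeline would read a file or query a database.
RAW_CSV = """city,temp_c
Delhi,31.5
Mumbai,
Delhi,29.5
Pune,24.0
"""


def extract(text: str) -> list[dict]:
    """Extract: read rows from the CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple[str, float]]:
    """Transform: drop rows with no reading, then average per city."""
    totals: dict[str, tuple[float, int]] = {}
    for row in rows:
        if not row["temp_c"]:
            continue  # cleaning: skip missing values
        total, count = totals.get(row["city"], (0.0, 0))
        totals[row["city"]] = (total + float(row["temp_c"]), count + 1)
    return [(city, total / count) for city, (total, count) in sorted(totals.items())]


def load(conn: sqlite3.Connection, rows: list[tuple[str, float]]) -> None:
    """Load: write the aggregated rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS avg_temp (city TEXT PRIMARY KEY, avg_c REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO avg_temp VALUES (?, ?)", rows)
    conn.commit()


def run(conn: sqlite3.Connection, text: str) -> dict:
    """Run all steps and log simple counts as a stand-in for monitoring."""
    raw = extract(text)
    clean = transform(raw)
    load(conn, clean)
    stats = {"extracted": len(raw), "loaded": len(clean)}
    log.info("pipeline finished: %s", stats)
    return stats


conn = sqlite3.connect(":memory:")
stats = run(conn, RAW_CSV)
rows = conn.execute("SELECT city, avg_c FROM avg_temp ORDER BY city").fetchall()
print(rows)
```

Logging how many rows were extracted and how many were loaded is one of the simplest useful checks: a sudden change in either number is often the first sign that a source or a transformation has broken.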

ETL is commonly used to move data between databases, data warehouses, and other storage systems, as well as to and from various types of data processing and analytics systems. ETL pipelines can process large amounts of data in batch mode or move data in real-time streams.

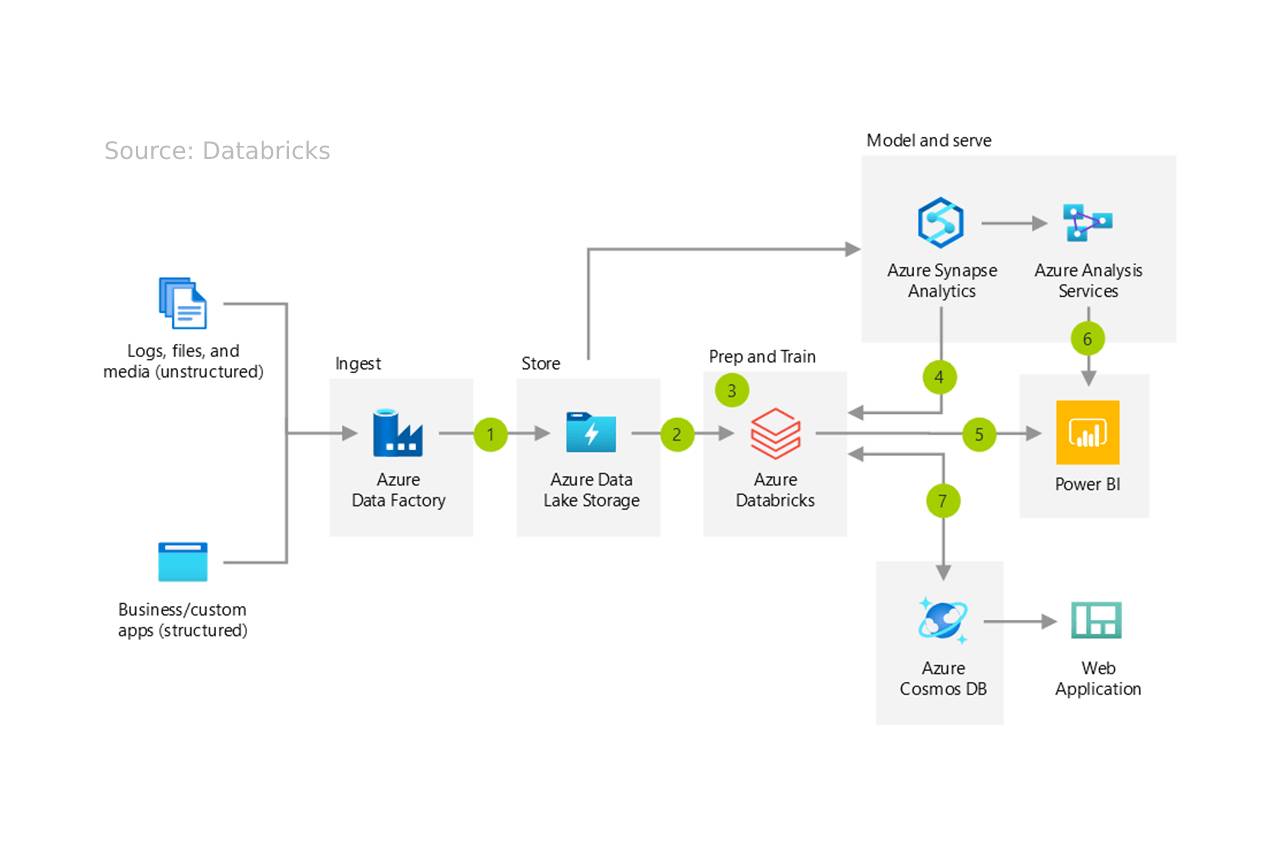

Streaming pipelines process each record as it arrives, while batch pipelines process accumulated data on a schedule. Either kind can be implemented using various tools and technologies, including custom code, data integration platforms, and cloud-based services.
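The difference between the two modes can be illustrated with a small Python sketch. It is a simplification under assumed names (`run_batch`, `run_stream`, and the sample events are hypothetical): a list stands in for a scheduled batch, and a generator stands in for a live stream.

```python
from typing import Iterable, Iterator


def transform(event: dict) -> dict:
    # The same transformation is shared by both modes.
    return {**event, "value": event["value"] * 2}


def run_batch(events: list[dict]) -> list[dict]:
    """Batch mode: the whole dataset is available and processed in one go."""
    return [transform(e) for e in events]


def run_stream(events: Iterable[dict]) -> Iterator[dict]:
    """Streaming mode: each event is processed and emitted as it arrives."""
    for event in events:
        yield transform(event)


# Hypothetical events; a real stream would come from a queue or socket.
events = [{"id": i, "value": i} for i in range(3)]

batch_out = run_batch(events)

stream = run_stream(iter(events))
first = next(stream)    # available before the rest has been consumed
rest = list(stream)

print(batch_out)
print(first, rest)
```

Both modes produce the same records here; what changes is when each result becomes available, which is usually the deciding factor when choosing between them.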

There are many roles in the data science field, and data engineer is often considered one of the most technical of them. Data engineers are the people who build data pipelines.

If you want to prepare for roles such as data analyst, big data analyst, data engineer, data warehouse manager, BI analyst, or data scientist, you can opt for the Console Flare Data Science Certification program, which can prepare you for these job profiles.